Beyond Intelligent Agents: Embodied Artificial Intelligence

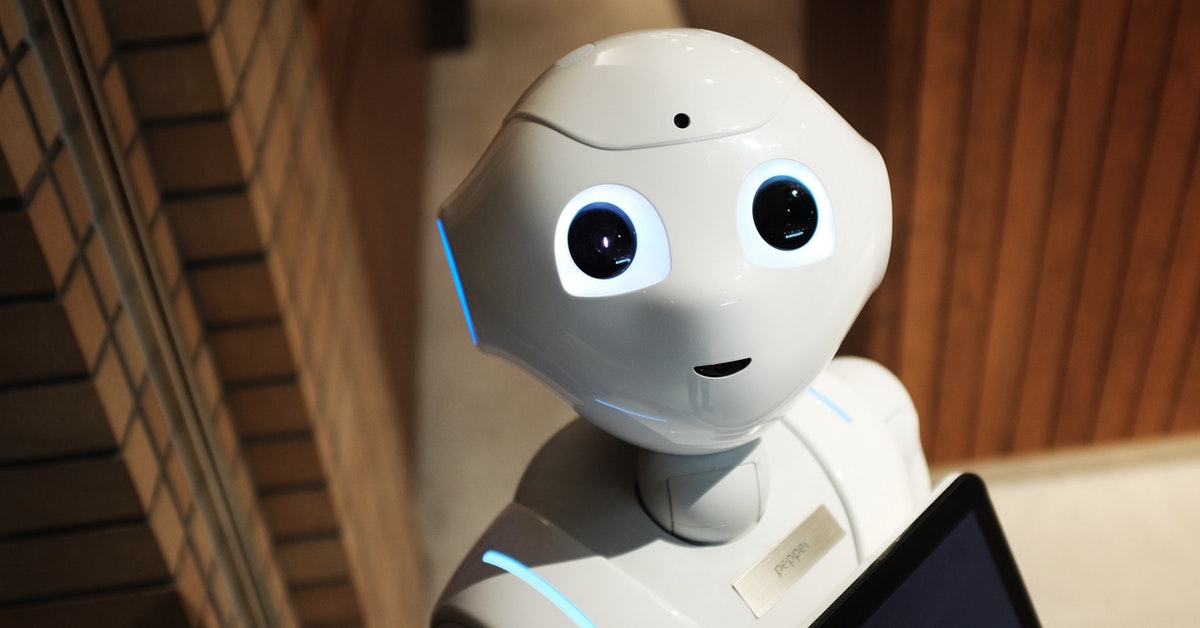

What if we told you that your robot could respond to real-life situations? For example, suppose the fire alarm goes off while you are taking a piano lesson.

Does that sound far-fetched?

Facebook plans to develop robotic assistants that can meet our needs in different ways.

To be precise, Facebook A.I. Research, the company’s artificial intelligence research division, is working on “embodied artificial intelligence.” This so-called embodied AI is expected to go beyond current AI voice interfaces, such as Google Assistant, Alexa and Siri, by performing tasks in a physical environment.

People have long thought of AI agents as disembodied chatbots, and Facebook wants to prove otherwise. The focus now is on building systems that can function and find things in the real world.

Embodied artificial intelligence:

“Embodied AI,” as the term implies, means AI that works in the physical world. This involves giving a system a physical body to move with and seeing how that body fits into real-world scenarios. The idea is based on the theory of embodied cognition, which holds that cognition is shaped by the body and its interactions with the environment. Embodied artificial intelligence asks whether intelligence is as much a part of the body as it is of the brain.

Following this logic, AI researchers seek to improve the functionality of the intelligent systems they build.

Kristen Grauman, a professor of computer science at the University of Texas at Austin and a research scientist at Facebook A.I. Research, told Digital Trends:

“We’re far from those capabilities, but you can imagine scenarios like asking a home robot ‘Can you go and check if my laptop is on my desk? If so, bring it to me.’ Or the robot hears something somewhere upstairs and goes to investigate where it is and what it is.”

While Facebook’s end goal is still a long way off, the company has made some progress.

Facebook’s latest developments in embodied AI:

- Indoor Mapping Systems – These enable robots to navigate unexplored spaces.

- SoundSpaces – It’s an audio simulation tool that allows us to produce realistic audio renderings based on room geometry, materials, and more. This could help future AI assistants understand how sound works in the real world.

What’s next for embodied AI?

Facebook’s research covers more than building a physical version of an intelligent AI assistant. It could go well beyond that, perhaps toward robots that are more context-aware and intelligent, far beyond avatars such as Microsoft’s Clippy.

For example, engineers want AI robots to give accurate answers to questions such as “What did we have for dessert on Sunday night?” or “Where did I leave my car keys?”

That means Facebook engineers have more work to do to build embodied AI agents. These agents will need to navigate from one place to another, form and store memories, plan what to do next, understand gravity, and decode human activity.

Other collaborators on these projects include the University of Illinois, the University of Texas at Austin, Oregon State University and Georgia Tech, among others.

According to Dhruv Batra, a professor at the Georgia Tech School of Computing and a research scientist at Facebook A.I. Research:

“Facebook AI is a leader in many of the subfields that embodied artificial intelligence draws on, including computer vision, language understanding, robotics, reinforcement learning, curiosity, and self-supervision, among others. Progress in each of these subfields alone is a significant achievement, and combining them in innovative ways enables us to advance the field of artificial intelligence further.”

Source: Digital Trends.
